Against the backdrop of Mobile World Congress (MWC) in Barcelona, Enrico Salvatori, Senior Vice President and President of Qualcomm Europe, delivered a compelling address highlighting Qualcomm’s pivotal role in advancing artificial intelligence (AI).

Speaking at an event held alongside MWC, Salvatori outlined how AI is reshaping user interactions, driving hybrid computing models, and enabling powerful on-device processing. His address underscored Qualcomm’s leadership in making AI more efficient, accessible, and seamlessly integrated into everyday devices.

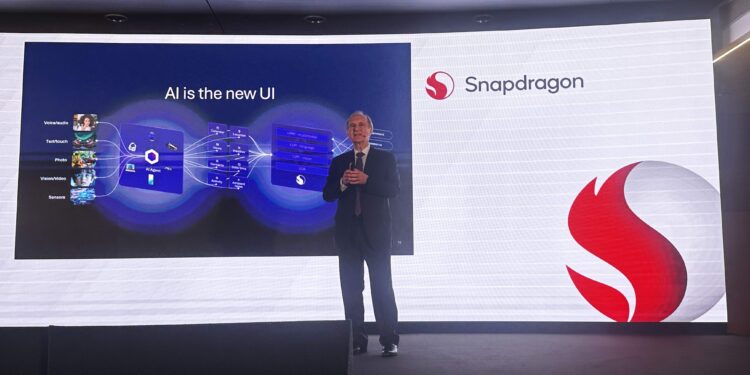

‘AI is the new UI’

One of the most striking takeaways from Salvatori’s keynote was his assertion that AI is becoming the new user interface. He emphasised how AI is fundamentally changing the way people interact with their devices, making interactions more intuitive and efficient.

“The device is personal. The device is private. The device contains our data, our apps, our information,” he said, pointing to a fundamental shift in how people access and manage information.

Rather than navigating through applications and files manually, AI-powered interfaces will enable users to retrieve information through natural language commands. “We don’t have to select which app, which data, or which file—we just ask, and the AI agent finds it for us,” Salvatori noted. This represents a move towards a more seamless, voice-assisted, and context-aware computing experience.

The shift to hybrid AI

Salvatori also addressed a major shift in AI computing—moving from a cloud-dependent model to a hybrid AI approach that balances processing between cloud servers and edge devices. Qualcomm’s advancements in chipset design have made it possible to run AI workloads directly on devices, reducing reliance on cloud computing.

“The training of AI models remains on the cloud, requiring significant computational power,” he explained. “But the inference processing is now happening more and more on the device.” This shift is driven by the growing capabilities of on-device neural processing units (NPUs), which deliver key benefits such as enhanced privacy, lower latency, and greater reliability. By keeping data on the device rather than constantly sending it to the cloud, AI-powered applications can deliver real-time performance while improving security and efficiency.

According to Salvatori, this hybrid model reflects a broader trend across the industry, where AI developers are working to optimise both computational efficiency and data security. “We are moving the AI processing into the device, where the data is already available,” he said.

Breakthroughs in AI model efficiency

AI models are also evolving rapidly. Traditionally, large language models (LLMs) required massive cloud-based infrastructure to function, but recent advancements are changing that. Salvatori highlighted the emergence of small language models (SLMs) that can deliver near-equivalent performance with significantly fewer computing resources.

He pointed to a major shift in model sizes, noting that in early 2023, leading AI models had 175 billion parameters, while by 2024, optimised models had shrunk to just 8 billion parameters. “This means we can now run powerful AI models directly on smartphones and PCs,” he said, referencing the latest Llama 3.1 and Mistral models that exemplify this breakthrough.
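To put those parameter counts in perspective, a rough back-of-the-envelope sketch of the memory needed just to hold a model's weights is shown below. The 16-bit and 4-bit precisions are illustrative assumptions, not figures from the keynote, and the estimate ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes).

    Assumes every parameter is stored at the same precision; real deployments
    often mix precisions and need extra memory at run time.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Parameter counts are the ones quoted in the article; the bit widths
    # (16-bit and 4-bit) are assumed here purely for illustration.
    for label, params in [("175B-parameter model", 175), ("8B-parameter model", 8)]:
        for bits in (16, 4):
            size = weight_memory_gb(params, bits)
            print(f"{label} at {bits}-bit: ~{size:.1f} GB of weights")
```

Under these assumptions, the larger model needs hundreds of gigabytes for its weights at 16-bit precision, while the 8-billion-parameter model fits in a few gigabytes once its weights are stored at 4 bits, which is within the memory range of current phones and laptops.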

This leap forward is fuelled by a positive feedback loop between hardware and software. As chipsets become more advanced, developers are optimising AI models to run more efficiently on-device. “Because we increase the hardware, they optimise the software, and we can do even more,” Salvatori explained. Qualcomm’s AI-optimised chipsets are driving this innovation, allowing developers to deploy powerful AI solutions without needing cloud-based resources.

AI-powered devices are here

Salvatori made it clear that AI-powered devices are no longer theoretical; they are already available. Qualcomm’s Snapdragon X Elite and X Plus platforms are prime examples of this transformation, enabling the next generation of AI-capable smartphones and PCs.

“We’re not talking about future concepts,” he said. “These devices are commercially available now.” The impact of AI is particularly visible in the PC market, where Qualcomm has introduced multiple tiers of AI-enabled computing platforms. “The segmentation of our chipset platforms reflects real market demand and traction,” he noted, underscoring the growing appetite for AI-enhanced computing in both consumer and enterprise applications.

Building an AI-first ecosystem

Beyond hardware, Qualcomm is investing in an AI-first development ecosystem. One of its major initiatives is the AI Hub—a platform designed to help application developers build and optimise AI-powered solutions for Qualcomm’s hardware.

“To facilitate the developers, we introduced AI Hub—a portal that allows them to select models, optimise applications for Qualcomm’s AI hardware, and test them in real time,” Salvatori explained. The platform has seen strong adoption, with over 1,150 developers actively using it.

A standout example from the AI Hub is ALLaM, an AI model developed in collaboration with partners from Saudi Arabia. “ALLaM is now running on AI-enabled PCs, demonstrating how hardware, AI models, and applications come together to create a complete ecosystem,” he said.

The future of AI at the edge

Salvatori’s keynote reinforced Qualcomm’s leadership in bringing AI closer to users—not just as a service, but as an integral part of personal devices. “AI is no longer a distant future—it is here today, changing the way we interact with technology,” Salvatori concluded. And with Qualcomm at the forefront, AI-powered devices are set to become even more intelligent, responsive, and seamlessly integrated into everyday life.
